Digitalization in manufacturing

Production is one of the most heavily optimized industrial sectors, and for good reason. Avoidable scrap and machine downtime cost not only time and nerves but, above all, a significant amount of money. To prevent this, companies use digital systems to organize and execute their manufacturing processes. For this purpose, they often rely on Manufacturing Execution Systems (MES). Recently, another term has gained increased attention: Manufacturing Operations Management, abbreviated as MOM.

This blog post explains how MES and MOM are related and what to consider when choosing an MES.

What is MES?

MES is software that helps manufacturing companies organize their production. Initially, sales planning is carried out and corresponding production orders are created in the Enterprise Resource Planning (ERP) system. Subsequently, the production department uses the MES to execute these orders.

The MES is used to determine who will execute which production order and which resources and tools they will use. During production, employees manually enter operational data into the system, thereby supplementing the data collected automatically from machine controls and sensors. To ensure product quality, the MES enables planning and documentation of quality inspections.

The MES thus creates transparency within the production department. Finally, employees report completed orders back to the ERP system, triggering logistical and commercial follow-up processes.
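The order flow described above (the ERP system creates the order, the MES assigns and executes it, data is collected, and the result is reported back) can be illustrated with a minimal sketch. This is not how any particular MES is implemented. All names here (`ProductionOrder`, `assign`, `record`, `inspect`, `report_to_erp`, and the `scrap_count` data point) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class OrderStatus(Enum):
    RELEASED = "released"        # created in the ERP system, handed to the MES
    IN_PROGRESS = "in_progress"  # assigned to an operator and a machine
    COMPLETED = "completed"      # reported back to the ERP system


@dataclass
class ProductionOrder:
    """Hypothetical production order as the MES might see it."""
    order_id: str
    product: str
    quantity: int
    status: OrderStatus = OrderStatus.RELEASED
    operator: Optional[str] = None
    machine: Optional[str] = None
    records: list = field(default_factory=list)      # operational data
    inspections: list = field(default_factory=list)  # quality checks


def assign(order: ProductionOrder, operator: str, machine: str) -> None:
    """Decide who executes the order and on which resource."""
    order.operator = operator
    order.machine = machine
    order.status = OrderStatus.IN_PROGRESS


def record(order: ProductionOrder, source: str, name: str, value: float) -> None:
    """Store one data point; source is 'manual' or 'machine' (assumed labels)."""
    order.records.append({"source": source, "name": name, "value": value})


def inspect(order: ProductionOrder, check: str, passed: bool) -> None:
    """Document the result of a quality inspection."""
    order.inspections.append({"check": check, "passed": passed})


def report_to_erp(order: ProductionOrder) -> dict:
    """Close the order and build the confirmation sent back to the ERP system."""
    if order.status is not OrderStatus.IN_PROGRESS:
        raise ValueError("only orders in progress can be reported")
    order.status = OrderStatus.COMPLETED
    scrap = sum(r["value"] for r in order.records if r["name"] == "scrap_count")
    return {
        "order_id": order.order_id,
        "good_quantity": order.quantity - scrap,
        "scrap_quantity": scrap,
        "quality_ok": all(i["passed"] for i in order.inspections),
    }


# Example run with made-up values
order = ProductionOrder(order_id="PO-1", product="bracket", quantity=100)
assign(order, operator="operator-7", machine="press-2")
record(order, source="machine", name="cycle_time_s", value=12.5)  # from sensors
record(order, source="manual", name="scrap_count", value=3)       # typed in by staff
inspect(order, check="dimension check", passed=True)
confirmation = report_to_erp(order)
print(confirmation)
```

In a real installation, the confirmation would travel through whatever interface connects the MES and the ERP system. The dictionary returned here only stands in for that message.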

What is MOM?

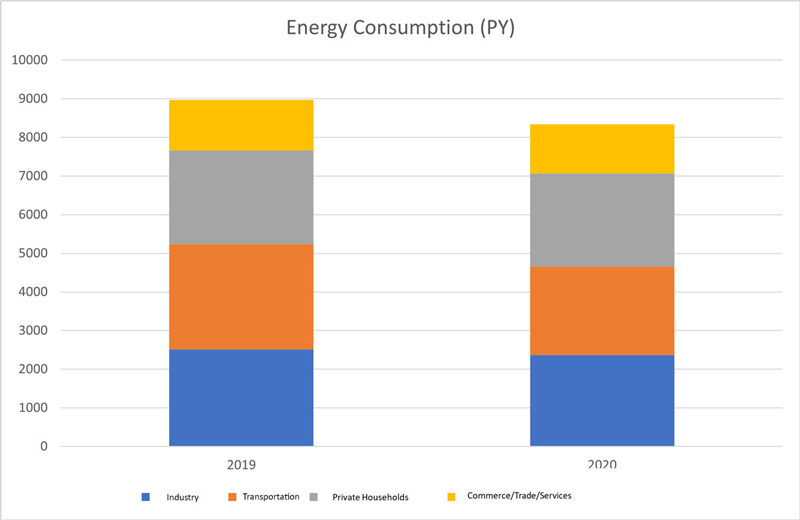

Manufacturing Operations Management (MOM) is a holistic concept with the goal of optimizing the overall value chain process. Companies achieve this by digitally managing their manufacturing processes and transparently providing manufacturing-related information across multiple departments. Production processes are considered an integral part of cross-departmental business processes. To ensure seamless communication from the manufacturing to the management level, information exchange between different IT system domains is essential. This includes, for example:

- Product Lifecycle Management (PLM) for product development and planning work steps in production,

- Enterprise Resource Planning (ERP) for sales planning and commercial order processing,

- Manufacturing Execution Systems (MES) for executing production orders,

- Quality Management Software (QMS) to ensure product quality,

- Industrial Internet of Things (IIoT) platforms to consolidate data from machine controls and sensors and monitor manufacturing processes in real time.

The interaction of these IT systems makes collaboration between different departments and teams more efficient, positively impacting the entire value chain process. Production operates at lower manufacturing costs and can ensure shorter delivery times and high product quality. By integrating production processes into the overall value chain process through the holistic MOM approach, companies can adapt quickly and flexibly to changing market situations.

How do MES and MOM differ?

MES is an important component of the MOM approach. As shopfloor software, it primarily focuses on executing tasks and processes within production. MOM, on the other hand, describes the overarching concept that integrates production processes into the business processes of the overall value chain. The approach aims to optimize the value chain by coordinating information across various departments. The concept includes not only the execution level (MES functions) but also adjacent functions from areas such as ERP, PLM, QMS, and IIoT.

What to consider when choosing an MES?

The challenge in selecting MES software is ensuring that it fits the company’s manufacturing structure and corresponding needs. For example, process manufacturing often requires recipe management, while discrete manufacturing involves working with bills of materials.

Furthermore, it is crucial to focus on the seamless integration of the system into the current IT infrastructure, encompassing elements such as PLM, ERP, QMS, and IIoT platforms. Following the MOM approach, maintaining cross-departmental information consistency significantly improves overall efficiency.

Companies should consider the following aspects:

- Expandability

Depending on the project scope, initially rolling out some basic MES functions minimizes project risks. Subsequently, it is possible to gradually add further functional areas until all relevant processes are integrated. For this approach, modular software that grows step by step with the company’s needs is recommended.

- Scalability

In addition to the functional expansion of an MES to cover more areas, it is relevant whether the solution can scale to all manufacturing locations. This requires support for the relevant languages and the ability to centrally consolidate and analyze local information. Ultimately, the MES provider must also be able to conduct implementation projects on a global scale.

- Customizability

Production processes are as individual as the manufactured products. The better the MES supports the company’s processes and information needs, the greater the benefit.

- Future-proofness

The economic resilience of the MES provider and their willingness to integrate new technologies, such as IIoT and artificial intelligence (AI), are crucial factors for the system’s long-term development.

- User Experience (UX)

If the software is intuitive and well-designed, it avoids acceptance issues and the need for extensive training measures. The most feature-rich system might be worthless if end users do not use it correctly.

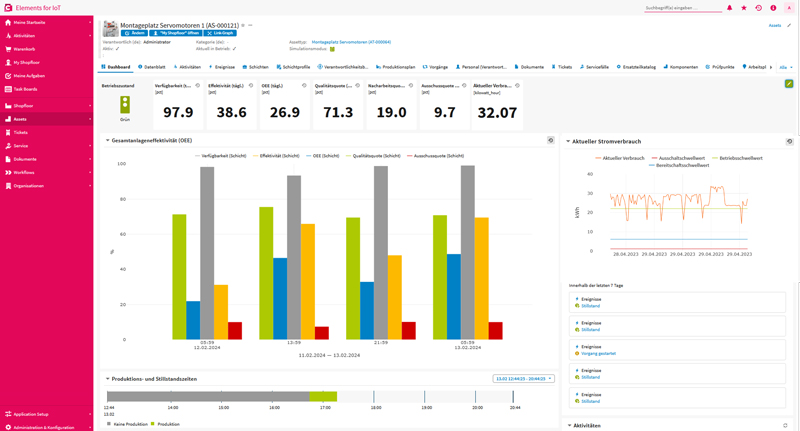

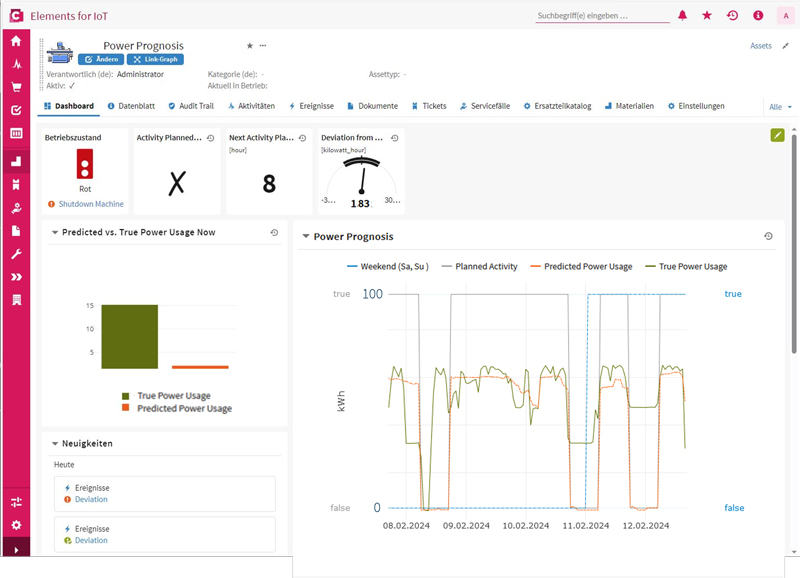

If you are looking for an MES for discrete manufacturing and want to follow the MOM approach, CONTACT Elements for IoT could be the right solution for you. This holistic manufacturing management system combines traditional MES functions with advanced maintenance management, energy monitoring, and seamless IT integration. The result: cost savings through reduced scrap and downtime and the integration of manufacturing into the overall value chain process.