Sustainability as a competitive edge: one step ahead with PLM

Sustainable thinking is no longer a “nice-to-have” – regulations and customer demands have made it a central pillar of modern innovation. A growing number of companies are realizing that ecological responsibility and economic success can go hand in hand. This is especially evident in product development: where cost-effectiveness used to dominate, sustainability has emerged as another key factor.

The right balance between economic and ecological aspects

While cost and efficiency remain crucial, staying competitive in the future requires taking the environmental balance into account when making business decisions. The challenge lies in finding the right balance between economic performance and ecological responsibility. This is most successful when sustainability is considered from the very beginning – at the design stage – rather than at the very end.

Why the product development process is crucial

Around 80% of a product’s environmental impact is already determined during the development phase. Decisions about materials, manufacturing processes, energy use, and recyclability made during this stage play a decisive role. Leveraging reliable and transparent data in the decision-making process enables companies to lower the environmental impact of their products.

LCA vs. PCF: Two key terms briefly explained

Anyone involved in sustainable product development will inevitably encounter these two concepts:

- Life Cycle Assessment (LCA): The assessment of a product’s environmental impact throughout its lifecycle, from raw material extraction to disposal.

- Product Carbon Footprint (PCF): The greenhouse gas emissions attributable to a product over its lifecycle, expressed in CO₂ equivalents. The PCF is often part of a broader LCA.

Implementing sustainability directly in the PLM system

CONTACT’s sustainability solution allows this environmental data to be recorded and used directly in CIM Database PLM. This enables a systematic evaluation of materials, processes, and product structures. Whether entered manually or imported automatically from environmental databases, a product’s environmental impact can be analyzed and improved directly within the system.
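To make the idea of evaluating a product structure more concrete, here is a minimal sketch of how a carbon footprint could be rolled up over a bill of materials. It is not how CIM Database PLM implements this; the `Part` class, its field names, and all masses and emission factors are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    """A node in a simplified product structure (hypothetical model)."""
    name: str
    mass_kg: float = 0.0                # mass of this part's own material
    factor_kg_co2e_per_kg: float = 0.0  # assumed emission factor of that material
    quantity: int = 1                   # how often the part occurs in its parent
    children: list["Part"] = field(default_factory=list)

    def footprint(self) -> float:
        """Return the footprint in kg CO2e, including all child parts."""
        own = self.mass_kg * self.factor_kg_co2e_per_kg
        nested = sum(child.footprint() for child in self.children)
        return self.quantity * (own + nested)


# Illustrative numbers only, not taken from any environmental database.
frame = Part("frame", mass_kg=2.0, factor_kg_co2e_per_kg=8.0)
wheel = Part("wheel", mass_kg=0.5, factor_kg_co2e_per_kg=4.0, quantity=2)
scooter = Part("scooter", children=[frame, wheel])

total = scooter.footprint()
print(f"{scooter.name}: {total} kg CO2e")
```

Because each part only knows its own material and its children, swapping one material's emission factor immediately changes the total for every assembly that uses it, which is the kind of comparison that matters at the design stage.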

Asset Administration Shell: a key to data exchange in the supply chain?

Sustainability is not a solo effort. Especially for complex products involving multiple suppliers, effective data exchange is crucial. This is where the concept of the Asset Administration Shell (AAS) comes into play – a standardized representation of digital twins for industrial components.

Using AAS submodels such as the Carbon Footprint, companies can communicate environmental data in a standardized way, both internally and externally. This creates a seamless data foundation across the entire value chain and enables them to integrate data from purchased components.

Three key takeaways:

- Sustainability starts with engineering, where crucial decisions are made.

- Standardized data formats enable the integration of environmental data into the product lifecycle.

- With IT tools like CONTACT Elements Sustainability Cloud, companies can not only plan eco-friendly operations but also implement sustainability early in the development process.

Conclusion

Developing sustainable products is no longer a vision for the future – it’s a reality today. Companies that adopt the right tools at an early stage and rely on standardized processes gain not only ecological advantages but also economic benefits.

Embeddings explained: basic building blocks behind AI-powered systems

With the rise of modern AI systems, you often hear phrases like, “The text is converted into an embedding…” – especially when working with large language models (LLMs). However, embeddings are not limited to text; they are vector representations for all types of data.

Deep learning has evolved significantly in recent years, particularly with the training of large models on large datasets. These models generate versatile embeddings that prove useful across many domains. Since most developers lack the resources to train their own models, they use pre-trained ones.

Many AI systems follow this basic workflow:

Input → API (to large deep model) → Embeddings → Embeddings are processed → Output

In this blog post, we take a closer look at this fundamental component of AI systems.

What are embeddings?

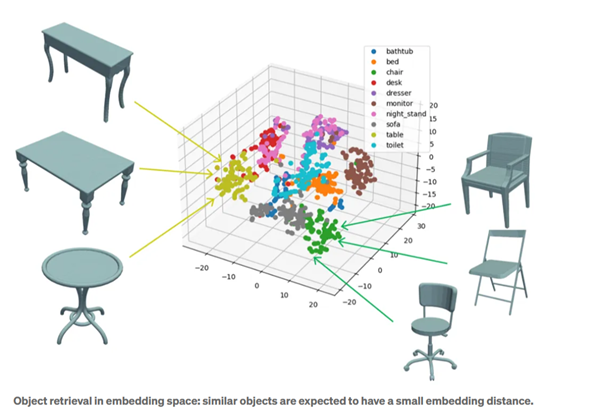

Simply put, an embedding is a kind of digital summary: a sequence of numbers that captures the characteristics of an object, whether it is text, an image, or audio. Similar objects have embeddings that are close to each other in the vector space.
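What "close to each other" means can be illustrated with cosine similarity, one common way to compare two vectors. The following is a small sketch with hand-made three-dimensional vectors; real embeddings come from a trained model and typically have hundreds or thousands of dimensions, so the numbers here are purely assumed.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy embeddings, invented for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])

print(f"cat vs. dog: {cat_dog:.2f}")
print(f"cat vs. car: {cat_car:.2f}")
```

With these toy values, "cat" and "dog" point in nearly the same direction, while "cat" and "car" do not, so the first similarity is clearly higher than the second.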

Technically speaking, embeddings are vector representations of data. They are based on a mapping (embedder, encoder) that functions like a translator. Modern embeddings are often created using deep neural networks, which reduce complex data to a lower-dimensional representation. This compression loses some information, so the original input cannot always be reconstructed exactly from an embedding.

How do embeddings work?

Embeddings are not a new invention, but deep learning has significantly improved them. They can be constructed by hand-written rules or learned automatically through machine learning. Early methods are simple rule-based approaches: Bag-of-Words represents a text by counting how often each word occurs, and One-Hot Encoding represents each word as a binary vector.
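The two early methods can be written down in a few lines. This is a minimal sketch using only the Python standard library; the helper names `build_vocabulary`, `one_hot`, and `bag_of_words` are made up for this example, and tokenization is simplified to lowercasing and splitting on whitespace.

```python
from collections import Counter


def build_vocabulary(sentences):
    """Collect all distinct words, sorted so every word has a fixed position."""
    return sorted({word for s in sentences for word in s.lower().split()})


def one_hot(word, vocabulary):
    """Binary vector with a single 1 at the position of the word."""
    return [1 if word == entry else 0 for entry in vocabulary]


def bag_of_words(sentence, vocabulary):
    """Vector of word counts; word order in the sentence is ignored."""
    counts = Counter(sentence.lower().split())
    return [counts[entry] for entry in vocabulary]


sentences = ["the cat sat", "the dog sat on the mat"]
vocabulary = build_vocabulary(sentences)

print(vocabulary)                               # ['cat', 'dog', 'mat', 'on', 'sat', 'the']
print(one_hot("dog", vocabulary))               # [0, 1, 0, 0, 0, 0]
print(bag_of_words(sentences[1], vocabulary))   # [0, 1, 1, 1, 1, 2]
```

Both representations grow with the size of the vocabulary and treat every pair of different words as equally unrelated, which is the limitation that learned embeddings address.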

Today, neural networks handle this process. Models like Word2Vec or GloVe automatically learn the meaning of and relationships between words. In image processing, deep learning models identify key points and extract features.

Why are embeddings useful?

Because almost any type of data can be represented with embeddings – text, images, audio, videos, graphs, and more. In a lower-dimensional vector space, tasks such as similarity search or classification are easier to solve.

For example, if you want to determine which word in a sentence does not fit with the others, embeddings allow you to represent the words as vectors, compare them, and identify the “outliers”. Embeddings also enable connections between different formats: a text query can retrieve matching images and videos, for instance.
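The outlier example can be sketched as follows: compute, for each word, its average similarity to all other words and pick the word with the lowest value. The vectors below are invented toy values; in practice they would come from a pre-trained embedding model, and `odd_one_out` is a hypothetical helper, not a library function.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def odd_one_out(vectors):
    """Return the key whose vector is, on average, least similar to the rest."""
    def mean_similarity(key):
        others = [k for k in vectors if k != key]
        total = sum(cosine_similarity(vectors[key], vectors[k]) for k in others)
        return total / len(others)

    return min(vectors, key=mean_similarity)


# Toy embeddings, invented for illustration only.
words = {
    "apple": [0.9, 0.1, 0.0],
    "banana": [0.8, 0.2, 0.1],
    "cherry": [0.85, 0.15, 0.05],
    "hammer": [0.1, 0.9, 0.3],
}

outlier = odd_one_out(words)
print(outlier)  # hammer
```

The same comparison works for any kind of object, as long as all of them are embedded into the same vector space.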

In many cases, you do not need to create embeddings from scratch. There are numerous pre-trained models available, from the language models behind ChatGPT to image models like ResNet. These can be adapted, for example through fine-tuning, for specialized domains or tasks.

Small numbers, big impact

Embeddings have become one of the buzzwords in AI development. The idea is simple: transforming complex data into compact vectors that make it easier to solve tasks like detecting differences and similarities. Developers can choose between pre-trained embeddings or training their own models. Embeddings also enable different modalities (text, images, videos, audio, etc.) to be represented within the same vector space, making them an essential tool in AI.

For a more detailed look at this topic, check out the CONTACT Research Blog.