Reducing greenhouse gas (GHG) emissions is crucial in the fight against climate change. For many companies, indirect emissions in the value chain, known as Scope 3 emissions, are the largest contributor to their footprint. Because these emissions lie outside the company's direct control, they are usually the hardest to determine (and to reduce). How can companies address these central challenges within their value chains?

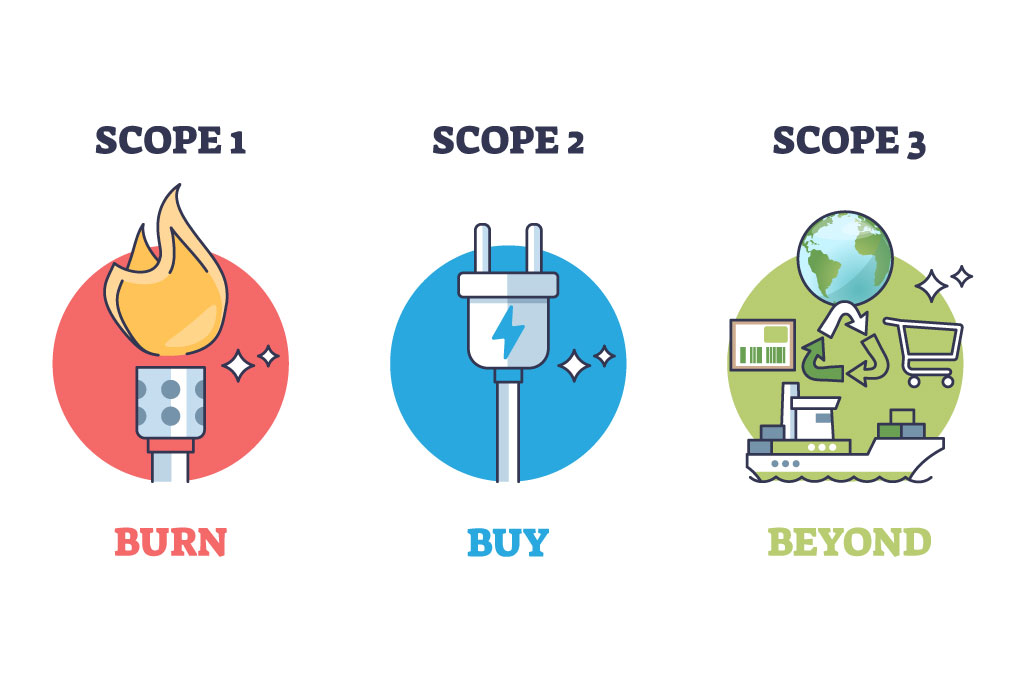

What are Scope 1, 2, and 3 emissions?

The Greenhouse Gas (GHG) Protocol classifies emissions into three categories: Scope 1 for direct emissions from company-owned sources, Scope 2 for indirect emissions from purchased energy, and Scope 3 for all other indirect emissions, including those from upstream and downstream processes within the value chain. Scope 3 is particularly important because it often accounts for the majority of GHG emissions. The GHG Protocol defines 15 categories of Scope 3 emissions that arise from both upstream and downstream activities. These include raw material extraction, production and transportation of purchased components, and the use of the manufactured products by end consumers. These emissions are difficult to capture as they are not directly under the company’s control.
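The three-scope classification can be made concrete with a minimal Python sketch. It tags each emission record with its scope and, for Scope 3, with one of the 15 GHG Protocol categories, then sums the records into Scope 1, Scope 2, upstream Scope 3, and downstream Scope 3. The record format, the function names `bucket` and `totals`, and all figures are hypothetical. Only the category list follows the GHG Protocol Scope 3 Standard, where categories 1 to 8 are upstream and 9 to 15 are downstream.

```python
from collections import defaultdict

# The 15 Scope 3 categories of the GHG Protocol Scope 3 Standard.
# Categories 1-8 are upstream, categories 9-15 are downstream.
SCOPE3_CATEGORIES = {
    1: "Purchased goods and services",
    2: "Capital goods",
    3: "Fuel- and energy-related activities",  # not included in Scope 1 or 2
    4: "Upstream transportation and distribution",
    5: "Waste generated in operations",
    6: "Business travel",
    7: "Employee commuting",
    8: "Upstream leased assets",
    9: "Downstream transportation and distribution",
    10: "Processing of sold products",
    11: "Use of sold products",
    12: "End-of-life treatment of sold products",
    13: "Downstream leased assets",
    14: "Franchises",
    15: "Investments",
}


def bucket(scope, category=None):
    """Return the reporting bucket for one emission record (hypothetical helper)."""
    if scope in (1, 2):
        return f"Scope {scope}"
    if scope == 3:
        if category not in SCOPE3_CATEGORIES:
            raise ValueError(f"unknown Scope 3 category: {category}")
        return "Scope 3 upstream" if category <= 8 else "Scope 3 downstream"
    raise ValueError(f"unknown scope: {scope}")


def totals(records):
    """Sum (scope, category, t CO2e) records per reporting bucket."""
    result = defaultdict(float)
    for scope, category, tco2e in records:
        result[bucket(scope, category)] += tco2e
    return dict(result)


# Hypothetical records: (scope, Scope 3 category or None, t CO2e)
records = [
    (1, None, 120.0),   # e.g. fuel burned in company-owned boilers
    (2, None, 80.0),    # e.g. purchased electricity
    (3, 1, 900.0),      # purchased goods and services
    (3, 4, 150.0),      # upstream transportation and distribution
    (3, 11, 2400.0),    # use of sold products by end consumers
]
print(totals(records))
```

Even with these made-up numbers, the pattern the text describes shows up: the two Scope 3 buckets together dwarf the emissions the company controls directly.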

Corporate Carbon Footprint (CCF) vs. Product Carbon Footprint (PCF)

There are two central approaches to calculating emissions: the Corporate Carbon Footprint (CCF), which encompasses all activities of a company, and the Product Carbon Footprint (PCF), which focuses on the lifecycle of a specific product. The PCF is particularly important when it comes to determining emissions along the value chain. Companies that aim to measure their Scope 3 emissions also need data from their suppliers regarding the PCF of the components they purchase.
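Supplier PCFs feed into Scope 3 reporting in a simple way, shown in the Python sketch below. It assumes each supplier reports a cradle-to-gate PCF per unit of a purchased component. Under that assumption, emissions from purchased goods (Scope 3 category 1) can be estimated as the sum of purchased quantity times supplier PCF, which the GHG Protocol describes as the supplier-specific method. The class name, part names, and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PurchasedComponent:
    part_id: str        # hypothetical part identifier
    quantity: float     # units purchased in the reporting period
    pcf_kg_co2e: float  # supplier-reported cradle-to-gate PCF per unit (assumed)


def purchased_goods_emissions(components):
    """Estimate Scope 3 category 1 emissions in kg CO2e from supplier PCFs."""
    return sum(c.quantity * c.pcf_kg_co2e for c in components)


components = [
    PurchasedComponent("housing", 10_000, 2.5),
    PurchasedComponent("motor", 10_000, 12.0),
    PurchasedComponent("cable-set", 20_000, 0.5),
]
print(purchased_goods_emissions(components))
```

The calculation itself is trivial. The hard part is obtaining a reliable `pcf_kg_co2e` value for every purchased part.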

Why is measuring Scope 3 emissions important?

Companies can directly influence Scope 1 and Scope 2 emissions and can therefore calculate them more easily. However, Scope 3 emissions must not be overlooked when assessing the entire value chain. Because upstream and downstream processes are often the largest sources of GHG emissions, measuring Scope 3 is the only way to identify and reduce “hotspots” within the value chain.

For many SMEs, a significant share of emissions lies in upstream processes. The same applies to industries that rely on complex, globally distributed supply chains. The automotive industry, for instance, depends heavily on purchased components and services, which significantly affect its GHG balance. According to the study “Climate-Friendly Production in the Automotive Industry” by Öko-Institut e.V., an average of 74.8% of emissions occur during the use phase, 18.6% originate in the upstream value chain with purchased components, and in-house production (Scope 1 and 2 emissions) accounts for only about 1.9%. As the industry shifts toward e-mobility and use-phase emissions fall, the emissions of purchased components, and thus those of suppliers, make up a larger share of the total and become a key lever.
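The e-mobility argument follows from simple arithmetic. The Python sketch below treats the three shares quoted above as percentages of the same total and lumps the unattributed remainder into "other". It then applies a purely hypothetical scenario in which use-phase emissions halve while all other stages stay constant in absolute terms. The halving is an illustrative assumption, not a result of the study.

```python
# Shares quoted from the Öko-Institut study, in percent of the same total.
use_phase = 74.8
upstream_components = 18.6
in_house = 1.9
# Remainder not broken out in the quoted figures.
other = 100.0 - (use_phase + upstream_components + in_house)

# Hypothetical scenario: use-phase emissions halve (e.g. through electrification),
# while all other stages keep the same absolute emissions.
new_use_phase = use_phase / 2
new_total = new_use_phase + upstream_components + in_house + other

# Share of purchased components in the new, smaller total.
upstream_share = upstream_components / new_total * 100
print(f"Upstream share: {upstream_components:.1f}% -> {upstream_share:.1f}%")
```

In this scenario, the supplier share rises from 18.6% to roughly 30% without suppliers emitting any more than before. This is why purchased components gain weight as a reduction lever.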

Challenges in the supply chain

The pressure on suppliers to make their production more efficient and sustainable is growing, along with the need for transparency about the emissions of the parts they supply. Key challenges in the supply chain include data quality and availability. To address these challenges and reduce GHG emissions, companies need new approaches in areas ranging from material selection to production methods. A solid data foundation supports these decisions and enables accurate documentation of emissions.
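Data availability affects the result in a way the following Python sketch makes visible. When a supplier PCF is missing, the calculation falls back to a secondary emission factor, such as an industry-average value per unit. The sketch then reports how much of the total rests on supplier-specific data. The record layout, the fallback factors, and all figures are hypothetical, and real inventories usually combine more methods than these two.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ComponentRecord:
    part_id: str                   # hypothetical part identifier
    quantity: float                # units purchased in the reporting period
    supplier_pcf: Optional[float]  # kg CO2e per unit from the supplier, None if unavailable
    fallback_factor: float         # hypothetical secondary (average-data) factor, kg CO2e per unit


def estimate(components):
    """Return (total kg CO2e, share of the total based on supplier data)."""
    total = 0.0
    primary = 0.0
    for c in components:
        if c.supplier_pcf is not None:
            emissions = c.quantity * c.supplier_pcf  # primary, supplier-specific data
            primary += emissions
        else:
            emissions = c.quantity * c.fallback_factor  # secondary data as a fallback
        total += emissions
    return total, (primary / total if total else 0.0)


components = [
    ComponentRecord("housing", 10_000, 2.5, 3.0),
    ComponentRecord("motor", 10_000, None, 15.0),  # supplier has not reported a PCF
    ComponentRecord("cable-set", 20_000, 0.5, 0.75),
]
total, coverage = estimate(components)
print(f"{total:.0f} kg CO2e, {coverage:.0%} from supplier data")
```

A single missing PCF for a high-impact part leaves most of the total resting on average data. Tracking the coverage share alongside the total makes data gaps of this kind visible.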

Reporting Scope 1 and Scope 2 emissions is already required under the GHG Protocol Corporate Standard, whereas Scope 3 reporting is currently optional. However, Scope 3 reporting is becoming more important, as EU regulations such as the Corporate Sustainability Reporting Directive (CSRD) and the associated European Sustainability Reporting Standards (ESRS) demonstrate. These regulations treat the disclosure of emissions as a central aspect of climate action and sustainable business practices.

Three key steps to reduce Scope 3 emissions

- Optimize data management: Companies should collect comprehensive data on their products and their lifecycles to make design and portfolio decisions in favor of sustainability.

- Ensure data sovereignty and trust: Accurately calculating Scope 3 emissions depends on exchanging data with partners in the upstream and downstream value chains. Companies need to keep control over the data they share and be able to trust the data they receive.

- Use open interfaces: Open data interfaces are essential for seamless integration and communication within the value chain. Approaches like the Asset Administration Shell (AAS) and concepts such as the Digital Product Passport (DPP) can provide valuable support.
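Open interfaces mean that supplier and customer agree on one machine-readable format, so a PCF written by one system can be read back by another without loss. The Python sketch below round-trips a PCF record through JSON. Its field names are hypothetical and are not the AAS submodel or DPP schema. A real exchange would follow a published specification.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class PcfRecord:
    # Hypothetical field names for illustration; not the AAS or DPP schema.
    product_id: str
    declared_unit: str     # unit the PCF refers to, e.g. "piece"
    pcf_kg_co2e: float     # carbon footprint per declared unit
    boundary: str          # system boundary, e.g. "cradle-to-gate"
    reporting_year: int


def to_json(record):
    """Serialize a record for exchange with a partner system."""
    return json.dumps(asdict(record), sort_keys=True)


def from_json(text):
    """Parse a record received from a partner system."""
    return PcfRecord(**json.loads(text))


record = PcfRecord("motor-4711", "piece", 12.0, "cradle-to-gate", 2024)
payload = to_json(record)
print(payload)
```

The round trip only works because both sides share the same field names and units. This is what a common standard provides at scale.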

Conclusion

Measuring and optimizing Scope 3 emissions is one of the greatest challenges for companies seeking to improve their GHG balance. By leveraging better data, optimizing collaboration within the supply chain, and ensuring transparent reporting, companies can meet regulatory requirements and make progress toward a more sustainable future.

Read a more detailed article on Scope 3 emissions on the CONTACT Research Blog.