Sustainable thinking is no longer a “nice-to-have” – regulations and customer demands have made it a central pillar of modern innovation. A growing number of companies are realizing that ecological responsibility and economic success can go hand in hand. This is especially evident in product development: where cost-effectiveness used to dominate, sustainability has emerged as another key factor.

The right balance between economic and ecological aspects

While cost and efficiency remain crucial, staying competitive in the future requires taking the environmental balance into account when making business decisions. The challenge lies in finding the right balance between economic performance and ecological responsibility. This is most successful when sustainability is considered from the very beginning – at the design stage – rather than at the very end.

Why the product development process is crucial

Around 80% of a product’s environmental impact is already determined during the development phase. Decisions about materials, manufacturing processes, energy use, and recyclability made during this stage play a decisive role. Leveraging reliable and transparent data in the decision-making process enables companies to lower the environmental impact of their products.

LCA vs. PCF: Two key terms briefly explained

Anyone involved in sustainable product development will inevitably encounter these two concepts:

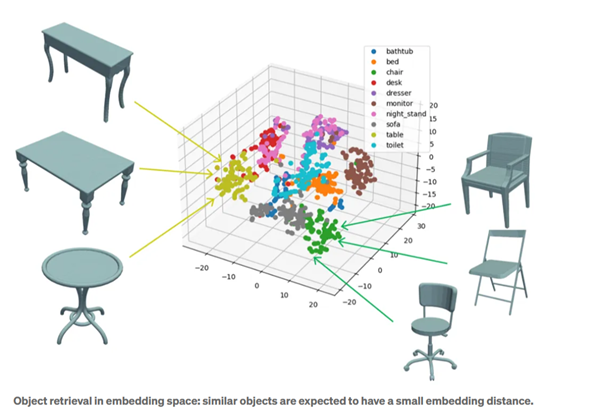

- Life Cycle Assessment (LCA): The assessment of a product’s environmental impact throughout its lifecycle, from raw material extraction to disposal.

- Product Carbon Footprint (PCF): The total greenhouse gas emissions attributable to a product over its lifecycle, expressed in CO₂ equivalents. Because it covers only climate impact, the PCF is often one part of a broader LCA.

Implementing sustainability directly in the PLM system

CONTACT’s sustainability solution allows this environmental data to be recorded and used directly in CIM Database PLM. This enables a systematic evaluation of materials, processes, and product structures. Whether entered manually or imported automatically from environmental databases, a product’s environmental impact can be analyzed and improved directly within the system.
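To illustrate what evaluating a product structure can mean in practice, here is a minimal Python sketch that rolls up a PCF across a bill of materials. It is not the CIM Database API: the `Component` class, the `pcf_per_unit` function, and all part names and figures are hypothetical and chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Component:
    """A node in a product structure (hypothetical model for illustration)."""
    name: str
    quantity: float = 1.0      # units of this component per parent assembly
    own_pcf_kg: float = 0.0    # kg CO2e per unit caused by this node itself
    children: list[Component] = field(default_factory=list)


def pcf_per_unit(component: Component) -> float:
    """PCF of one unit: own footprint plus quantity-weighted child footprints."""
    return component.own_pcf_kg + sum(
        child.quantity * pcf_per_unit(child) for child in component.children
    )


# Made-up example data: a pump with a housing, four screws, and a motor.
motor = Component("motor", quantity=1, own_pcf_kg=0.5, children=[
    Component("rotor", quantity=1, own_pcf_kg=3.0),
    Component("stator", quantity=1, own_pcf_kg=4.0),
])
pump = Component("pump", own_pcf_kg=1.0, children=[
    Component("housing", quantity=1, own_pcf_kg=2.5),
    Component("screw", quantity=4, own_pcf_kg=0.05),
    motor,
])

print(f"{pump.name}: {pcf_per_unit(pump):.2f} kg CO2e per unit")
```

A roll-up like this makes it easy to see which subassembly dominates the footprint and to compare design alternatives by swapping a single node.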

Asset Administration Shell: a key to data exchange in the supply chain?

Sustainability is not a solo effort. Especially for complex products involving multiple suppliers, effective data exchange is crucial. This is where the concept of the Asset Administration Shell (AAS) comes into play – a standardized representation of digital twins for industrial components.

Using AAS submodels such as the Carbon Footprint, companies can communicate environmental data in a standardized way, both internally and externally, which also lets them integrate data from purchased components. This creates a seamless data foundation across the entire value chain.
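As a rough sketch of how supplier data might be integrated, the following Python example reads a carbon footprint record for a purchased part and merges it into internal data. The JSON layout is a heavily simplified assumption, not the actual AAS submodel schema, and the key names, part numbers, and the `read_supplier_pcf` helper are hypothetical.

```python
import json

# Hypothetical, simplified supplier payload. A real AAS submodel follows the
# AAS metamodel and is considerably richer; every key below is an assumption.
SUPPLIER_JSON = """
{
  "idShort": "CarbonFootprint",
  "partNumber": "MOTOR-4711",
  "co2eqKg": 7.5,
  "referenceUnit": "piece",
  "scope": "cradle-to-gate"
}
"""


def read_supplier_pcf(raw: str) -> tuple[str, float]:
    """Return (part number, kg CO2e per piece); raise ValueError if unusable."""
    data = json.loads(raw)
    if data.get("idShort") != "CarbonFootprint":
        raise ValueError("not a carbon footprint record")
    if data.get("referenceUnit") != "piece":
        raise ValueError("unsupported reference unit")
    value = float(data["co2eqKg"])
    if value < 0:
        raise ValueError("footprint must not be negative")
    return data["partNumber"], value


# Internal footprint data, with the value for the purchased motor still missing.
pcf_by_part = {"HOUSING-01": 2.5, "MOTOR-4711": None}

part, value = read_supplier_pcf(SUPPLIER_JSON)
if part in pcf_by_part:
    pcf_by_part[part] = value  # fill the gap with the supplier's figure

print(pcf_by_part)
```

Checking the record type and reference unit before accepting a value is the point of the sketch: a shared, standardized format is what makes such checks possible without supplier-specific parsing.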

Three key takeaways:

- Sustainability starts with engineering, where crucial decisions are made.

- Standardized data formats enable the integration of environmental data into the product lifecycle.

- With IT tools like CONTACT Elements Sustainability Cloud, companies can not only plan eco-friendly operations but also implement sustainability early in the development process.

Conclusion

Developing sustainable products is no longer a vision for the future – it’s a reality today. Companies that adopt the right tools at an early stage and rely on standardized processes gain not only ecological advantages but also economic benefits.